| Posted February 6, 2006 |

| The New Science of Lying |

| Brain mapping, E.R.P. waves and microexpression readings could make deception harder. But would that make us better? |

| By ROBIN MARANTZ HENIG |

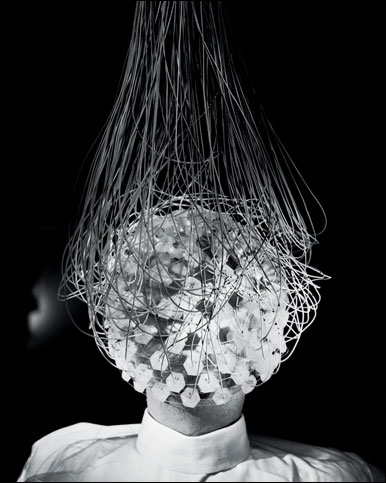

| Photographs by Larry Fink |

Scientists are using brain imaging and other tools as new kinds of lie detectors. But trickier even than finding the source of deception might be navigating a world without it.

Liars always look to the left, several friends say; liars always cover their mouths, says a man sitting next to me on a plane. Beliefs about how lying looks are plentiful and often contradictory: depending on whom you choose to believe, liars can be detected because they fidget a lot, hold very still, cross their legs, cross their arms, look up, look down, make eye contact or fail to make eye contact. Freud thought anyone could spot deception by paying close enough attention, since the liar, he wrote, "chatters with his finger-tips; betrayal oozes out of him at every pore." Nietzsche wrote that "the mouth may lie, but the face it makes nonetheless tells the truth."

This idea is still with us, the notion that liars are easy to spot. Just last month, Charles Bond, a psychologist at Texas Christian University, reported that among 2,520 adults surveyed in 63 countries, more than 70 percent believe that liars tend to avert their gazes. The majority also believe that liars squirm, stutter, touch or scratch themselves or tell longer stories than usual. The liar stereotype exists in just about every culture, Bond wrote, and its persistence "would be less puzzling if we had more reason to imagine that it was true." What is true, instead, is that there are as many ways to lie as there are liars; there's no such thing as a dead giveaway.

Most people think they're good at spotting liars, but studies show otherwise. A very small minority of people, probably fewer than 5 percent, seem to have some innate ability to sniff out deception with accuracy. But in general, even professional lie-catchers, like judges and customs officials, perform, when tested, at a level not much better than chance. In other words, even the experts would have been right almost as often if they had just flipped a coin.

In the middle of the war on terrorism, the federal government is not willing to settle for 50-50 odds. "Credibility assessment" is the new catch phrase, which emerged at about the same time as "red-level alert" and "homeland security." Unfortunately, most of the devices now available, like the polygraph, detect not the lie but anxiety about the lie. The polygraph measures physiological responses to stress, like increases in blood pressure, respiration rate and electrodermal skin response. So it can miss the most dangerous liars: the ones who don't care that they're lying, don't know that they're lying or have been trained to lie. It can also miss liars with nothing to lose if they're detected, the true believers willing to die for the cause.

Responding to federal research incentives, a handful of scientists are building a cognitive theory of deception to show what lying looks like — on a liar's face, in a liar's demeanor and, most important, in a liar's brain. The ultimate goal is a foolproof technology for deception detection: a brain signature of lying, something as visible and unambiguous as Pinocchio's nose.

Deception is a complex thing, evanescent and difficult to pin down; it's no accident that the poets describe it with diaphanous imagery like "tangled web" and "tissue of lies." But the federal push for a new device for credibility assessment leaves little room for complexity; the government is looking for a blunt instrument, a way to pick out black and white from among the duplicitous grays.

Nearly a century ago the modern polygraph started out as a machine in search of an application; it hung around for lack of anything better. But the polygraph has been mired in controversy for years, with no strong scientific theory to adequately explain why, or even whether, it works. If the premature introduction of a new machine is to be avoided this time around, the first step is to do something that was never done with the polygraph, to develop a theory of the neurobiology of deception. Two strands of scientific work are currently involved in this effort: brain mapping, which uses the 21st century's most sophisticated techniques for visualizing patterns of brain metabolism and electrical activity; and face reading, which uses tools that are positively prehistoric, the same two eyes used by our primate ancestors to spot a liar.

As these two strands, the ancient and the futuristic, contribute to a new generation of lie detectors, the challenge will be twofold: to resist pressure to introduce new technologies before they are adequately tested and to fight the overzealous use of these technologies in places where they do not belong — to keep inviolable that most private preserve of our ordinary lives, the place inside everyone's head where secrets reside.

| The Five-of-Clubs Lie |

The English language has 112 words for deception, according to one count, each with a different shade of meaning: collusion, fakery, malingering, self-deception, confabulation, prevarication, exaggeration, denial. Lies can be verbal or nonverbal, kindhearted or self-serving, devious or baldfaced; they can be lies of omission or lies of commission; they can be lies that undermine national security or lies that make a child feel better. And each type might involve a unique neural pathway.

To develop a theory of deception requires parsing the subject into its most basic components so it can be studied one element at a time. That's what Daniel Langleben has been doing at the University of Pennsylvania. Langleben, a psychiatrist, started an experiment on deception in 2000 with a simple design: a spontaneous yes-no lie using a deck of playing cards. His research involved taking brain images with a functional-M.R.I. scanner, a contraption not much bigger than a kayak but weighing 10 tons. Unlike a traditional M.R.I., which provides a picture of the brain's anatomy, the functional M.R.I. shows the brain in action. It takes a reading, every two to three seconds, of how much oxygen is being used throughout the brain, and that information is superimposed on an anatomical brain map to determine which regions are most active while performing a particular task.

There's very little about being in a functional-M.R.I. scanner that is natural: you are flat on your back, absolutely still, with your head immobilized by pillows and straps. The scanner makes a dreadful din, which headphones barely muffle. If you're part of an experiment, you might be given a device with buttons to press for "yes" or "no" and another device with a single panic button. Not only is the physical setup unnatural, but in most deception studies the experimental design is unnatural, too. It is difficult to replicate the real-world conditions of lying — the relationship between liar and target, the urgency not to get caught — in a functional-M.R.I. lab, or in any other kind of lab. But as an early step in mapping the lying brain, such artificiality has to suffice.

In Langleben's first deception study at Penn, the subjects were told at the beginning of the experiment to lie about a particular playing card, the five of clubs. To be sure the card carried no emotional weight, Langleben screened out compulsive gamblers from the group. One at a time, the subjects lay motionless in the scanner, watched pictures of playing cards flash onto a screen and pressed a button indicating whether they had that card or not. When an image of a card they didn't have came up, the subjects, as they had been instructed, told the truth and pressed "no." But when an image of the five of clubs came up, they also pressed "no," even though the card was in their pockets. That is, whenever they saw the five of clubs, they lied.

According to Langleben, certain regions of the brain were more active on average when his 18 subjects were lying than when they were telling the truth. Lying was associated with increased activity in several areas of the cortex, including the anterior cingulate cortex and the superior frontal gyrus. "We didn't have a map of deception in the brain — we still don't — so we didn't know exactly what this meant," Langleben said. "But that wasn't the question we were asking at the time in any case. What we were asking with that first experiment was, 'Can the difference in brain activity between lie and truth be detected by functional M.R.I.?' Our study showed that it can." He said that the prefrontal cortex — the reasoning part of the brain — was generally more aroused during lying than during truth-telling, an indication that it took more cognitive work to lie.

Brain mappers are just beginning to figure out how different parts of the brain function. The function of one region found to be activated in the five-of-clubs experiment, the anterior cingulate cortex, is still the subject of some debate; it is thought, among other things, to help a person choose between two conflicting responses, which makes it a logical place to look for a signature of deception. This region is also activated during the Stroop task, in which a series of words are written in different colors and the subject must respond with what color the ink is, disregarding the word itself. This is harder than it sounds, at least when the written word is a color word that is different from the ink it is written in. If the word "red" is written in blue, for instance, a lot of people say "red" instead of "blue." Telling a spontaneous lie is similar to the Stroop task in that it involves holding two things in mind simultaneously — in this case, the truth and the lie — and making a choice about which one to apply.

Langleben performed his card experiment again in 2003, with a few refinements, including giving his subjects the choice of two cards to lie about and whether to lie at all. This second study found activation in some of the same regions as the first, establishing a pattern of deception-related activity in particular parts of the cortex: one in the front, two on the sides and two in the back. The finding in the back, the parietal cortex, intrigued Langleben.

"At first I thought the parietal finding was a fluke," he said. The parietal cortex is usually activated during arousal of various kinds. It is also involved in the manifestation of thoughts as physical changes, like goose bumps that erupt when you're afraid, or sweating that increases when you lie. The connection to sweating interested Langleben, since sweating is also one of the polygraph's hallmark measurements. He looked at existing studies of this response, and in all of them he found activity that could be traced back to the parietal lobe. Until Langleben's observation of its connection to brain changes, the sweat response (which the polygraph measures with sensors on the palm or fingertips) had been thought to be a purely "downstream" change, a secondary effect caused not by the lie itself but by the consequences of lying: guilt, anxiety, fear or the excess positive emotion one researcher calls "duping delight." But Langleben's findings indicated that it might have a corollary "upstream," in the central nervous system. This meant that at least one polygraph measurement might have a signature right at the source of the lie, the brain itself.

So there it was: the first intimation of a Pinocchio response.

The parietal-cortex finding, while speculative, is "interesting to pay attention to because of its relationship to the polygraph," Langleben said. "In this way, we might not have to cancel the polygraph. We may be able to put it on firm neuroscience footing."

| One Lie Zone or Many |

Over at Harvard, Stephen Kosslyn, a psychologist, was looking at the map Langleben was starting to build and found himself troubled by the connection between deception and the anterior cingulate cortex. "Yes, it lights up during spontaneous lying," Kosslyn said, but it also lights up during other tasks, like the Stroop task, that have nothing to do with deception. "So it couldn't be the lie zone."

Deception "is a huge, multidimensional space," he said, "in which every combination of things matters." Kosslyn began by thinking about the different dimensions, the various ways that lies differ from one another in terms of how they are produced. Is the lie about you, or about someone else? Is it about something you did yesterday or something your friend plans to do tomorrow? Do you feel strongly about the lie? Are there serious consequences to getting caught? Each type of lie might lead to activation of particular parts of the brain, since each type involves its own set of neural processes.

He decided to compare the brain tracings for lies that are spontaneous, like those in Langleben's study, with those that are rehearsed. A spontaneous lie comes when a mother asks her teenage son, "Did you do your math homework?" A rehearsed lie comes when she asks him, "Why are you coming home an hour past your curfew?" The question about the homework probably surprises him, and he has to lie on the fly. The question about the curfew was probably one he had been anticipating, and concocting an answer to, for most of the previous hour.

Kosslyn's working hypothesis was that different brain networks are used during spontaneous lying than are used during truth-telling or the telling of a memorized lie. Spontaneous lying requires the liar not only to generate the lie and keep the lie in mind but also to keep in mind what the truth is, to avoid revealing it by mistake. In contrast, Kosslyn said, a rehearsed lie requires only that an individual retrieve the lie from memory, since the work of establishing a credible lie has already been done.

To help his subjects generate meaningful lies to memorize, Kosslyn asked them to provide details about one notable work experience and one vacation experience. Then he helped them construct what he called an "alternative-reality scenario" about one of them. (The other experience he held in reserve as the basis for his subject's unrehearsed spontaneous lies.) If the experience was a vacation in Miami, for instance, Kosslyn changed it to San Diego; if the person had gone there to visit a sister, he changed it to a visit to Uncle Sol. Kosslyn had the participants practice the false scenario for a few hours, and then he put them into a scanner at Harvard's functional-M.R.I. facility. There were 10 subjects altogether, all in their 20's.

As he predicted, Kosslyn found that as far as the brain was concerned, spontaneous and rehearsed lies were two different things. They both involved memory processing, but of different kinds of memories, which in turn activated different regions of the cortex: one part of the frontal lobe (involved in working memory) for the spontaneous lie; a different part in the right anterior frontal cortex (involved in retrieving episodic memory) for the lie that was rehearsed. That's not much of a map yet, but it is a cumulative movement toward a theory of deception: that lying involves different cognitive work than truth-telling and that it activates several regions in the cerebral cortex that are also activated during certain memory and thinking tasks.

Even as these small bits of data emerge through functional-M.R.I. imagery, however, Kosslyn remains skeptical about the brain-mapping enterprise as a whole. "If I'm right, and deception turns out to be not just one thing, we need to start pulling the bird apart by its joints and looking at the underlying systems involved," he said. A true understanding of deception requires a fuller knowledge of functions like memory, perception and visual imagery, he said, aspects of neuroscience investigations not directly related to deception at all.

| With the push for an automated lie detector, some observers worry that we'll see a replay of the polygraph experience: the marketing of a halfway technology not quite capable of separating lying from other cognitive or emotional tasks. |

In Kosslyn's view, brain mapping and lie detection are two different things. The first is an academic exercise that might reveal some basic information about how the brain works, not only during lying but also during other high-level tasks; it uses whatever technology is available in the sophisticated neurophysiology lab. The second is a real-world enterprise, best accomplished not necessarily by using elaborate instruments but by encouraging people "to use their two eyes and brains." Searching for a "lie zone" of the brain as a counterterrorism strategy, he said, is like trying to get to the moon by climbing a tree. It feels as if you're getting somewhere because you're moving higher and higher. But then you get to the top of the tree, and there's nowhere else to go, and the moon is still hundreds of thousands of miles away. Better to have stayed on the ground and really figured out the problem before setting off on a path that looks like progress but is really nothing more than motion. Better, in this case, to discover what deception looks like in the brain by breaking it down into progressively smaller elements, no matter how artificial the setup and how tedious the process, before introducing a lie-detection device that doesn't really get you where you want to go.

| Your Brain Waves Know You're a Liar |

Even the most enthusiastic brain mappers probably agree with one aspect of Kosslyn's skeptical analysis: a true brain map of lying is, at best, elusive. Part of the difficulty comes from the technology itself. In the world of brain mapping, a functional-M.R.I. scan paints a picture that is broad and, in its way, lumbering. It can indicate which region of the brain is active, but it can take a reading no more frequently than once every two seconds.

For a more refined picture of cognitive change from one instant to the next, scientists have turned to the electroencephalogram, which detects neural impulses on the scale of milliseconds. But while EEG's might be ideal to answer "when" questions about brain activity, they are not so good at answering questions about "where." Most EEG's use 10 or 12 electrodes attached by a tacky glue at scattered spots on the scalp, which record electrical impulses firing from the brain as a whole. They give little indication of which region is doing the firing.

That's why deception researchers use a refined version of the ordinary EEG, which increases the number of electrodes from 12 to 128. These 128 electrodes, each the size of a typewriter key, are studded around a stretchy mesh cap. Using the cap, investigators can trace where electrical impulses are coming from when a person lies.

The cap is unwieldy and uncomfortable — definitely not ready yet for the world outside the laboratory. Jennifer Vendemia, a psychologist at the University of South Carolina, has been using the cap since 2000, when she began studying deception by looking at a particular class of brain wave known as E.R.P., for event-related potential. The E.R.P. wave represents electrical activity in response to a stimulus, usually 300 or 400 milliseconds after the stimulus is shown. It can be a sign that high-level cognitive processes, like paying attention and retrieving memories, are taking place.

Vendemia has studied deception and E.R.P. waves in 626 undergraduates. She outfits them with the electrode cap and a plastic barbershop cape, which is necessary because, in order to maintain an electrical circuit, each of the 128 electrodes has to be thoroughly soaked. The cap is sopping wet when she puts it on her subjects, and during the experiment Vendemia occasionally comes into the room with a squirter and soaks it down some more.

Vendemia presented her subjects with a series of true-false statements, like "The grass is green" and "A snake has 13 legs," which they were instructed to answer either truthfully or deceptively, depending on which color the statement was written in. The subjects took a longer time — up to 200 milliseconds longer, on average — to lie than to tell the truth. They revealed a change in certain E.R.P. waves while they were lying, especially in the regions of the brain that the functional-M.R.I. scanners also focused on as possible lie zones: the parietal and medial regions of the brain, along the top and middle of the head.

"E.R.P. has the advantage of being a little more portable, and substantially less expensive, than M.R.I.," Vendemia said. "But E.R.P. cannot do some of the things that functional M.R.I. can do. If you're trying to model the brain, you really need both techniques."

One thing E.R.P. might eventually be able to do is predict whether someone intends to lie — even before he or she has made a decision about it. This brings us into sci-fi territory, into the realm of mind reading. When Vendemia has a subject in an E.R.P. cap, she can detect the first brain-wave changes within 240 to 260 milliseconds after a true-false statement appears on a computer screen. But these changes are an indication of intention, not action; it can take 400 to 600 milliseconds for a person to decide whether to respond with "true" or "false." "With E.R.P., I've taken away your right to make a decision about your response," Vendemia said. "It's the ultimate invasion." If someone knows before you do what your brain is indicating as your intention, is there any room left, in that window of a few hundred milliseconds, for the exercise of free will? Or have you already been labeled a liar by your spontaneous brain waves, without your having a chance to override them and choose a different path?

| This refined version of the ordinary E.E.G. has 128 electrodes rather than 12 and may be able to pinpoint when lying occurs in the brain. | Sweating It Electrodes from a polygraph measure electrodermal changes that can be caused by lying. | Controversial There is little scientific evidence to support the use of polygraph tests, but they are still used to extract confessions. |

Lies make secrets possible; they let us carve out a private territory that no one, not even those closest to us, can enter without our permission. Without lies, there can be no such sanctuary, no interior life that is completely and inviolably ours. Do we want to allow anyone, whether a government interrogator or a beloved spouse, unfettered access to that interior life?

| How Lies Leak Into the Open |

Even a practiced lie-catcher like Paul Ekman recognizes that lying is a matter of privacy. "I don't use my ability to spot lies in my personal life," said Ekman, emeritus professor of psychology at the University of California, San Francisco. If his wife or two grown children want to lie to him, he said, that's their business: "They haven't given me the right to call them on their lies."

In his book "Telling Lies," Ekman underscored this point. His Facial Action Coding System, a precise categorization of the 10,000 or so expressions that are created by various combinations of 43 independent muscles in the face, allows him to do the same kind of mind reading that Vendemia can do with her E.R.P. cap. Facial expressions are hard-wired into the brain, according to Ekman, and can erupt without an individual's awareness about 200 milliseconds after a stimulus. Much like E.R.P. waves, then, a facial expression can give away your feelings before you are even aware of them, before you have made a conscious decision about whether to lie about those feelings or not. "Detecting clues to deceit is a presumption," Ekman wrote. "It takes without permission, despite the other person's wishes."

But in many situations, it's important to know who's lying to you, whether the liar wants you to or not. And for those times, Ekman said, his system of lie detection can be taught to anyone, with an accuracy rate of more than 95 percent. His holistic perspective is almost the polar opposite of that of brain mappers like Langleben and Vendemia: instead of focusing on the liar's neurons, Ekman takes a long, hard look at the liar's face.

The Facial Action Coding System is the key to Ekman's strategy. Basic emotions lead to characteristic facial expressions, which only a handful of really good liars manage to conceal. Part of lying is putting on a false face that's consistent with the lie. But even practiced liars, according to Ekman, may not always be able to control the "leakage" of their true feelings, which flit across the face in microexpressions that last less than half a second. These microexpressions indicate an incongruity between the liar's words and his emotions. "It doesn't mean he's lying necessarily," Ekman said. "It's what I call a 'hot spot,' a point of discontinuity that deserves investigation."

Ekman teaches police investigators, embassy officials and others how to spot liars, including how to read these microexpressions. He begins by showing photos of faces in apparently neutral poses. In each face, a microexpression appears for 40 milliseconds, and the trainee has to press a button to indicate which emotion was in that microexpression: fear, anger, surprise, happiness, sadness, contempt or disgust. When I took the pretest to measure my innate lie-detecting capabilities, I could see the microexpressions in about 70 percent of the examples. But after about 15 minutes of training, I improved. The training session let me stop the action if I missed a question, since Ekman's idea is that if you know what you're looking for — and the microexpressions, when frozen, are vivid and easy to name — you can spot them even when they flash by in an instant. In the post-training test, I scored an 86 percent.

In addition to microexpressions, Ekman said, certain aspects of a person's demeanor can indicate whether he is lying. Voice, hand movements, posture, speech patterns: when these vary from how the person usually speaks or gesticulates, or when they don't fit the situation, that's another hot spot to explore. Word choices often change with lying, too, with the speaker using "distancing language," like fewer first-person pronouns and more in the third person. Also common are what Ekman calls "verbal hedges," which liars might use to buy time as they figure out what they want to say. To illustrate a verbal hedge, Ekman pointed to one of the many cartoons he uses in his workshops: a shark standing in a courtroom, looking up at the judge and saying, "Define 'frenzy.'"

Ekman enjoys using these insights to unmask the lies of public figures (though he has a rule that prohibits him from commenting on any elected official currently in office, no matter how tempting a target). At his home in the Oakland Hills, he has a videotape library of some of the most notable lies of recent history, and he showed me how to watch one when I visited last fall. It was from a presidential news conference in early 1998, during the first days of the Monica Lewinsky scandal. Ekman smiled as he watched it; he knows this clip well. "I want you to listen to me," President Bill Clinton was saying, shaking his forefinger like a schoolmarm. "I did not have sexual relations with that woman."

There it was: the president's "distancing language," calling Lewinsky "that woman," and an almost imperceptible softening of his voice at the end of the sentence. When this news conference was originally broadcast, Ekman said, "everyone I had ever trained from all over the country called me and said: 'Did you see the president? He's lying.'"

Even though Ekman has been hired to teach his technique to embassy workers and military intelligence officers — to the tune of $35,000 for a five-day workshop — his low-tech approach to lie-catching is definitely out of vogue. "After 9/11," he said, "I contacted different federal agencies — the Defense Department, the C.I.A. — and said, 'I think there are some things I can teach your agents that can be of help right now.'" But several turned him down, he said, with one person bluntly stating, "I can't support anything unless it ends in a machine doing it."

| The First, Flawed Machine |

The quest for such a machine has roots in the early 20th century, when the first modern lie detector, a rudimentary polygraph, was introduced. The man often cited as its inventor, William Moulton Marston, was a Harvard-trained psychologist who went on to make his mark as the creator of the comic-book character Wonder Woman. Not coincidentally, one of Wonder Woman's most potent weapons was her Magic Lasso, which made it impossible for anyone in its grip to tell a lie.

Marston spent 20 years trying to get his machine used by the military, in courts and even in advertising. After the success of Wonder Woman, however, he used it mostly for entertainment. His comic-book editor, Sheldon Mayer, recalled being hooked up to a polygraph during a party at Marston's home. After a few warm-up questions, Marston tossed him a zinger, "Do you think you're the greatest cartoonist in the world?"

As Mayer wrote in his memoir, "I felt I was being quite truthful when I said no, and it turned out I was lying!" What an interesting reaction — even if, as was likely, Mayer was just trying to be funny. Because how prescient, really, to joke that the machine must have been right, that the machine knew more about Mayer than he did himself. It's the power of a simple mechanical device to make you doubt your own concept of truth and lie — "It turned out I was lying" — that made the polygraph so alluring, and so disturbing. And it's that power, combined with the idea that the machines are peering directly into the brain, that makes the polygraph's modern counterparts even more so.

Today, the polygraph is the subject of much controversy, with organizations devoted to publicizing "countermeasures" — ways to subvert the results — to prove how unreliable it is. But the American Polygraph Association says it has "great probative value," and police departments still use it to help focus their criminal investigations and to try to extract confessions. The polygraph is also used to screen potential and current federal employees in law enforcement and for security clearances, although private employers are prohibited from using it as a pre-employment screen. Polygraphists are also routinely brought in to investigate such matters as insurance fraud, corporate theft and contested divorce.

But there is little scientific evidence to back up the accuracy of the polygraph. "There has been no serious effort in the U.S. government to develop the scientific basis for the psychophysiological detection of deception by any technique," stated a report issued by the National Research Council in 2003. Polygraph research has been "managed and supported by national security and law enforcement agencies that do not operate in a culture of science," the council said, suggesting that these are not the best settings for an objective assessment of any device's pros and cons.

| 'The idea that intelligence began in social manipulation, deceit and cunning cooperation seems to explain everything we had always puzzled about,' wrote two primatologists. |

The polygraph has many cons. It requires a suspect who is cooperative, feels guilty or anxious about lying and hasn't been educated to the various countermeasures that can thwart the results. Polygraph results can be more reliable in investigations in which the questioners already know what they're looking for. This allows investigators to develop a line of questioning that leads to something like the Guilty Knowledge Test. This is a multiple-choice test in which the answer is something only a guilty person would know — and only a guilty person's polygraph readings would indicate arousal upon hearing it.

The history of polygraphs is a cautionary tale, an example of how not to introduce the next generation of credibility-assessment devices. "Security and law enforcement agencies need to improve their capability to independently evaluate claims proffered by advocates of new techniques for detecting deception," the National Research Council said. "The history of the polygraph makes clear that such agencies typically let clinical judgment outweigh scientific evidence."

| Thermal Scanners, Eye Trackers and Pupillometers |

History is in some danger of repeating itself at the site of the government's most focused effort to look for the next generation of lie detectors, the Department of Defense Polygraph Institute. This is where the brain mapping of the academic investigators is turned into practical machinery. Scientists at Dodpi (pronounced DOD-pie) are an inventive bunch, investigating instruments that measure the body's emission of heat, light, vibration or any other physiological properties that might change when someone tells a lie.

The Dodpi facility sits at one end of the huge Army base at Fort Jackson, S.C., where Army recruits en route to Iraq go for basic training. Among the new machines being studied is a thermal scanner, in which a computer image of a person's face is color-coded according to how much heat it emits. The region of interest, just inside each eye, grows hotter when a person lies. It also grows hotter during many other cognitive tasks, however, so a more specific signature for deception might be required to keep the thermal scanner from falling prey to the same problems of imprecision as the polygraph.

Another machine is the eye tracker, which follows a person's gaze — its fixation, duration, rapid eye movements and scanning path — to determine if he's looking at something he has seen before. It can be thought of as a mute version of the Guilty Knowledge Test.

Other high-tech deception detectors — many of them capable of remote operation, so they could theoretically be used without a suspect's knowledge — are being developed at laboratories across the country, with financing from agencies like Dodpi, the Department of Homeland Security and the Defense Advanced Research Projects Agency. (Defense Department officials will not reveal the amount they spend on credibility assessment, nor the degree to which the budget has increased since 9/11, because some of the research is classified.) The detectors look for increases in physiological processes that are associated with lying: a sniffer test that measures levels of stress hormones on the breath, for instance, a pupillometer that measures pupil dilation and a near-infrared-light beam that measures blood flow to the cerebral cortex.

With this push for an automated lie detector, some observers worry that we'll see a replay of the polygraph experience: the marketing of a halfway technology not quite capable of separating lying from other cognitive or emotional tasks. The polygraph was a machine in search of an application, and it became entrenched in criminal justice more out of habit than out of proved efficacy. This could easily happen again, as credibility assessment is being lauded as a crucial counterterrorism tool.

"The fear is that because so much money has been put into homeland security, that people may be trying to find quick solutions to complex problems by buying something," said Tom Zeffiro, a neurologist at Georgetown University and chairman of a workshop on high-tech credibility assessment sponsored last summer by the National Science Foundation. "And technology that might not be thoroughly evaluated might be put into practice." Already there are efforts to sell computer algorithms and devices that some scientists believe to be insufficiently tested, products with names like Brain Fingerprinting and No Lie M.R.I. Zeffiro said that one of his workshop suggestions is to establish a neutral testing laboratory to keep such products from being used commercially before there is at least some minimum amount of evidence that they work.

Big Brother concerns hover in the background, too, with some of these instruments, especially the smallest ones. It is sobering to think that we might be moving toward a society in which hidden sensors are trying, in one way or another, to read our minds. At Dodpi, however, scientists don't seem to fret much about such things. "The operational use of what we develop is not something we think about," said Andrew Ryan, a former police psychologist who is the head of research at Dodpi. "Our job is to develop the science. Once that science is developed, how it's used is up to other people."

| The Smudge of Ordinary Lies |

Each day we walk a fine line between deception and discretion. "Everybody lies," Mark Twain wrote, "every day; every hour; awake; asleep; in his dreams; in his joy; in his mourning." First there are the lies of omission. You go out to dinner with your sister and her handsome new boyfriend, and you find him obnoxious. When you and your sister discuss the evening later, isn't it a lie for you to talk about the restaurant and not about the boyfriend? What if you talk about his good looks and not about his offensive personality? Then there are the lies of commission, many of which are harmless, the lies that allow us to get along with one another. When you receive a gift you can't use, or are invited to lunch with a co-worker you dislike, you're likely to say, "Thank you, it's perfect" or "I wish I could, but I have a dentist's appointment," rather than speak the harsher truth. These are the lies we teach our children to tell; we call them manners. Even our automatic response of "Fine" to a neighbor's equally automatic "How are you?" is often, when you get right down to it, a lie.

| When Vendemia has a subject in an E.R.P. cap, she can detect brain-wave changes that are an indication of intention, not action. 'With E.R.P., I've taken away your right to make a decision about your response,' Vendemia says. 'It's the ultimate invasion.' |

More serious lies can have a range of motives and implications. They can be malicious, like lying about a rival's behavior in order to get him fired, or merely strategic, like not telling your wife about your mistress. Not every one of them is a lie that needs to be uncovered. "We humans are active, creative mammals who can represent what exists as if it did not, and what doesn't exist as if it did," wrote David Nyberg, a visiting scholar at Bowdoin College, in "The Varnished Truth." "Concealment, obliqueness, silence, outright lying — all help to hold Nemesis at bay; all help us abide too-large helpings of reality."

Learning to lie is an important part of maturation. What makes a child able to start telling lies, usually at about age 3 or 4, is that he has begun developing a theory of mind, the idea that what goes on in his head is different from what goes on in other people's heads. With his first lie to his mother, the power balance shifts imperceptibly: he now knows something she doesn't know. With each new lie, he gains a bit more power over the person who believes him. After a while, the ability to lie becomes just another part of his emotional landscape.

"Lying is just so ordinary, so much a part of our everyday lives and everyday conversations," that we hardly notice it, said Bella DePaulo, a psychologist at the University of California, Santa Barbara. "And in many cases it would be more difficult, challenging and stressful for people to tell the truth than to lie."

In the 1990's, DePaulo asked 147 people to keep a diary of their social interactions for one week and to note "any time you intentionally try to mislead someone," either verbally or nonverbally. At the end of the week, the subjects had lied, on average, 1.5 times a day. "Lied about where I had been," read a diary entry. "Said that I did not have change for a dollar." "Told him I had done poorly on my calculus homework when I had aced it." "Said I had been true to my girl."

People didn't feel guilty about these lies, by and large, but lying still left them with what DePaulo called a "smudge," a sort of smarmy feeling after lying. Her subjects reported feeling less positive about their interactions with people to whom they had lied than with people to whom they had not lied.

Still, DePaulo said that her research led her to believe that not all lying is bad, that it often serves a perfectly respectable purpose; in fact, it is sometimes a nobler, or at least kinder, option than telling the truth. "I call them kindhearted lies, the lies you tell to protect someone else's life or feelings," DePaulo said. A kindhearted lie is when a genetic counselor says nothing when she happens to find out, during a straightforward test for birth defects, that a man could not possibly have fathered his wife's new baby. It's when a neighbor lies about hiding a Jewish family in Nazi-occupied Poland. It's when a doctor tells a terminally ill patient that the new chemotherapy might work. And it's when a mother tells her daughter that nothing bad will ever happen to her.

"We found in our studies that these were the lies that women most often told to other women," DePaulo said. "Women are the ones saying, 'You did the right thing,' 'I know just how you feel,' 'That was a lovely dinner,' 'You look great.' I don't think they're doing that because they think the truth is unimportant or because they have a casual attitude toward lying. I think they just value their friends' feelings more than they value the truth."

If the search for an all-purpose lie detector were successful, and these everyday lies were uncovered along with the threatening or malicious ones, we might, paradoxically, end up feeling a little less safe than we felt before. It would be destabilizing indeed to be stripped of the half-truths and delusions on which social life depends.

| Does Lying Make Us Smarter? |

Personally, I cannot tell a lie. This is not to say that I never lie; I'm just not very good at it. My lies are mostly lies of omission, secrets that I choose not to talk about at all, because when I do say something deceptive, people usually see right through me.

I realize that my honesty comes as much from ineptitude as from integrity. In fact, my own dirty little secret is that I wish I were a better liar; I think it would make me a more interesting person, maybe even a better writer. Still, when I told Paul Ekman that I hardly ever lie, I detected in my voice an unseemly amount of pride.

Ekman's response surprised me. He is, after all, one of the nation's leading experts on spotting liars; I expected him to nod sagely, approving of me and my truthful ways. Instead, he listened to my veiled boast with a patient little smile. Then, without missing a beat, he started enumerating the qualities that are required to lie. To lie, he told me, a person needs three things: to be able to think strategically and plan her moves ahead of time, like a good chess player; to read the needs of other people and put herself in their shoes, like a good therapist; and to manage her emotions, like a grown-up person.

So had this very nice, polite, accomplished man dissed me? Had Ekman told me, indirectly, that bad liars like me are immature, unempathetic and not especially bright? Had he pointed out that the skills of lying are the same skills involved in the best human social interactions?

Probably. (Would he tell me the truth if I asked him?) Deception is, after all, one trait associated with the evolution of higher intelligence. According to the Machiavellian Intelligence Hypothesis, developed by Richard Byrne and Andrew Whiten, two Scottish primatologists at the University of St. Andrews in Fife, the more social a species, the more intelligent it is. The hypothesis holds that as social interactions became more and more complex, our primate ancestors evolved so they could engage in the trickery, manipulation, skulduggery and sleight of hand needed to live in large social groups, which helped them avoid predators and survive.

"All of a sudden, the idea that intelligence began in social manipulation, deceit and cunning cooperation seems to explain everything we had always puzzled about," Byrne and Whiten wrote. In 2004, Byrne and another colleague, Nadia Corp, looked at the brains and behavior of 18 primate species and found empirical support for the hypothesis: the bigger the neocortex, the more deceptive the behavior.

But even if liars are smarter and more successful than the rest of us, most people I know seem to be like me: secretly smug about their honesty, rarely admitting to telling lies. One notable exception is a friend who blurted out, when she heard I was writing this article, "I lie all the time."

Lying started early for this friend, she wrote later in an e-mail message. She grew up in a big, competitive family (14 siblings and step-siblings in all), and lying was the easiest way to get a word in edgewise. "There were so many of us," she wrote, "asserting knowledge became even more prized than the knowledge itself — because you were heard." If she didn't know something, she made it up. It got her siblings' attention.

These days, my friend, who is a novelist, claims that she lies almost reflexively. Maybe it gives her power to have information she's not sharing with her loved ones, she wrote; maybe it's just something left over from her childhood struggle for attention and from her writerly need to flex her imagination. "If I'm on the phone and my husband walks in and says, 'Who's that?' I might say Jill if it's Joan," she wrote to me. "Or if my mother asks me who I had lunch with, I'll tell her Caroline when it was Alice, for no good reason. This kind of useless lying is harmless except when I get caught — and I do get caught, because keeping track of useless lies is both daunting and exhausting."

My friend might be aware of getting caught, but habitual liars like her are probably harder to spot than neophytes like me. People who lie a lot are good at it, and they don't worry as much as I do about getting caught. And no matter what device or technique of lie detection is used, the liar who doesn't strain at her deception is still less likely to be fingered than the liar who does. In a recent study looking at the brain anatomy of pathological liars versus nonliars, researchers at the University of Southern California found that the liars had more white matter in their prefrontal cortexes. The investigators found their subjects partly through self-identification, an odd choice in a study of pathological liars. But it was an intriguing finding nonetheless. "White matter is pivotal to the connectivity and cognitive function of the human brain," Sean Spence, a deception researcher and psychiatrist at the University of Sheffield, wrote in an editorial accompanying the study's publication in the British Journal of Psychiatry last October. "And abnormal prefrontal white matter might affect complex behaviors such as deception."

As for my lying friend, she may or may not have an excess of white matter in the front of her brain. But she does lie, often. Her lies have no hidden purpose, she told me; she lies only for the sake of lying. "Of course," she added in her e-mail message, "I could be lying about all of this."

Today's federal effort to develop an efficient machine for credibility assessment has been compared to the Manhattan Project, the secret government undertaking to build the atomic bomb. This sounds hyperbolic, to compare a high-tech lie detector to a weapon of mass destruction, but Tom Zeffiro, who made the analogy, said that they raise similar moral quandaries, especially for the scientists doing the research. If a truly efficient lie detector could be developed, he said, we might find ourselves living in "a fundamentally different world than the one we live in today."

In the quest to make the country safer by looking for brain tracings of lies, it might turn out to be all but impossible to tell which tracings are signatures of truly dangerous lies and which are the images of lies that are harmless and kindhearted, or self-serving without being dangerous. As a result, we might find ourselves with instruments that can detect deception not only as an antiterrorism device but also in situations that have little to do with national security: job interviews, tax audits, classrooms, boardrooms, courtrooms, bedrooms.

This would be a problem. As the great physician-essayist Lewis Thomas once wrote, a foolproof lie-detection device would turn our quotidian lives upside down: "Before long, we would stop speaking to each other, television would be abolished as a habitual felon, politicians would be confined by house arrest and civilization would come to a standstill." It would be a mistake to bring such a device too rapidly to market, before considering what might happen not only if it didn't work — which is the kind of risk we're accustomed to thinking about — but also what might happen if it did. Worse than living in a world plagued by uncertainty, in which we can never know for sure who is lying to whom, might be to live in a world plagued by its opposite: certainty about where the lies are, thus forcing us to tell one another nothing but the truth.

Robin Marantz Henig, a contributing writer, is the author of "Pandora's Baby: How the First Test Tube Babies Sparked the Reproductive Revolution." Her most recent article for the magazine was about death.

Copyright 2006 The New York Times Company. Reprinted from The New York Times Magazine of Sunday, February 5, 2006.

